At a recent InfraGard event, I was made aware of a problem that I had never given much thought to but probably should have: securing the private keys for SSL and SSH certificates. Much like usernames and passwords, the public and private keys that encrypt your traffic and authenticate you to various internet services are critically important to manage and protect. I also learned that many people don't rotate their certificates nearly as frequently as recommended (how many of us have GoDaddy certificates that won't expire for 3 years?), and that private keys are often saved as plain files on file and network shares. That is very similar to saving usernames and passwords in an Excel spreadsheet or text file.

Just like a password, to ensure the security of your private key it is best practice to limit access to members of your organization who absolutely need to have control over it. It is also best practice to change your private key (and re-key any associated certificates) if a member of your team who had access to the private key leaves your organization. The challenge is finding these keys and identifying who is possibly using them.

Identifying Private & Public Keys

There are many sample private keys and certificates that you can download to see the makeup of a particular crt or key file for user and machine authentication. Opening one in a common text editor shows how it is structured: a Base64-encoded body between a '-----BEGIN ...-----' line and a matching '-----END ...-----' line.
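The layout of these files can be illustrated with a small Python sketch. It wraps placeholder bytes in the same BEGIN/END armor that real PEM-encoded keys and certificates use. The function name `pem_wrap` and the placeholder bytes are made up for illustration; the output is not a usable key or certificate, it only shows the shape you would see in an editor.

```python
import base64
import textwrap


def pem_wrap(label: str, raw: bytes) -> str:
    """Wrap raw bytes in PEM-style armor: BEGIN line, Base64 body, END line."""
    body = base64.b64encode(raw).decode("ascii")
    # PEM bodies are conventionally broken into 64-character lines.
    lines = textwrap.wrap(body, 64)
    return "\n".join(
        [f"-----BEGIN {label}-----", *lines, f"-----END {label}-----"]
    ) + "\n"


# Placeholder bytes only: this is NOT real key or certificate material.
placeholder = bytes(range(96))

print(pem_wrap("RSA PRIVATE KEY", placeholder))
print(pem_wrap("CERTIFICATE", placeholder))
```

The first and last lines of each printed block are the markers that the rest of this post relies on for detection.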

Building Intelligence to Identify Keys & Certificates in File Shares

One of the foundational tenets of DataGravity is to utilize intelligence on unstructured file shares and VMs to determine where sensitive data is being saved and accessed. In this case we will identify and discover private keys/certificates in file shares and VMs with DataGravity's automated detection and intelligence. This can be done using the Intelligence Management interface, allowing us to create a custom tag, attach it to a Discovery Policy, and then find and be alerted on this information.

We can simply give our new tag a name, 'Certificates Key', then a color indicator for importance (Red is the universal sign for 'Very Important'), and a description.

The pattern we will look for inside files to identify whether they are certificates or keys is the 'Begin' and 'End' lines for private keys and certificates. The regular expression that I found useful for this is listed below.

(-----(\bBEGIN\b|\bEND\b) ((\bRSA PRIVATE KEY\b)|(\bCERTIFICATE\b))-----)

When tested against the beginning and ending lines of these keys and certificates, my Match Pattern works as expected.
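The same kind of check can be reproduced outside the product. The sketch below runs the Match Pattern above through Python's `re` module against a few header and footer lines. It assumes DataGravity's regex engine treats `\b` and alternation the same way Python does, which is not confirmed here. The `samples` table and the `matches` helper are illustrative names.

```python
import re

# The Match Pattern from this post, unchanged.
PATTERN = re.compile(
    r"(-----(\bBEGIN\b|\bEND\b) ((\bRSA PRIVATE KEY\b)|(\bCERTIFICATE\b))-----)"
)

# Each sample line is paired with whether the pattern is expected to match it.
samples = {
    "-----BEGIN RSA PRIVATE KEY-----": True,
    "-----END RSA PRIVATE KEY-----": True,
    "-----BEGIN CERTIFICATE-----": True,
    "-----END CERTIFICATE-----": True,
    "-----BEGIN PRIVATE KEY-----": False,          # PKCS#8 header, not covered
    "-----BEGIN OPENSSH PRIVATE KEY-----": False,  # OpenSSH format, not covered
    "BEGIN CERTIFICATE": False,                    # the dashes are required
}


def matches(line: str) -> bool:
    """Return True if the line contains a marker the pattern recognizes."""
    return PATTERN.search(line) is not None


for line, expected in samples.items():
    result = matches(line)
    status = "ok" if result == expected else "UNEXPECTED"
    print(f"{line!r:45} match={result} ({status})")
```

Note that the pattern as written only covers the 'RSA PRIVATE KEY' and 'CERTIFICATE' labels. Keys stored under other labels, such as 'PRIVATE KEY' or 'OPENSSH PRIVATE KEY', would need additional alternatives if you want to tag them as well.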

Now that I have the tools in place to identify private keys and certificates, I simply need to update my Intelligence Profile to automatically discover new certificates and keys as they are saved.

Identify, Discover, & Notify

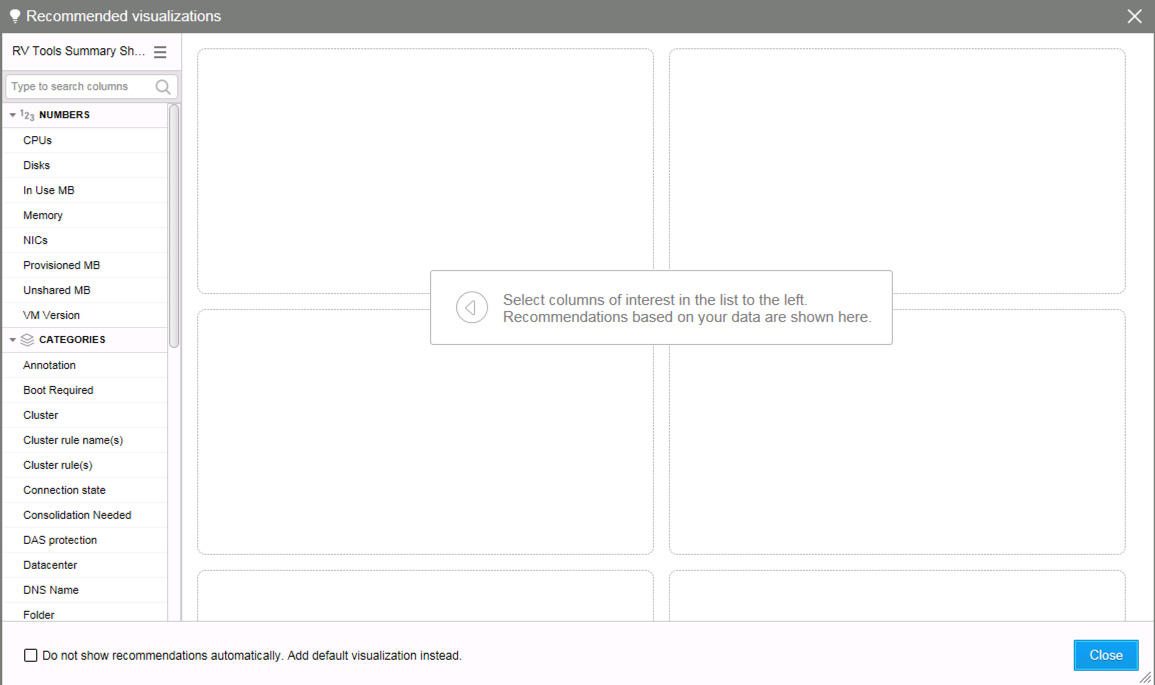

With the new intelligence tag for Certificates Key created and applied to my Intelligence Profile, I can very easily use DataGravity to search for and discover all instances of those files.

- Search for instances of the newly created tag - Certificates Key

- Identify the number of Results

- Preview any of the Files to confirm that it is a key or certificate

- You will notice that DataGravity can also look beyond the file extension to find this information. In this case the private key was saved as a text file, but we can still see by previewing the file that it contains the private key information.

- Export or Subscribe to the Search to be notified when Private Keys or Certificates are saved.
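For readers without DataGravity at hand, the idea behind the steps above (matching on content instead of file extension) can be approximated with a short script. This is a stand-alone sketch, not how DataGravity works internally. The function name `find_key_material` and the `max_bytes` read limit are assumptions chosen for illustration, and the pattern is a simplified bytes form of the Match Pattern shown earlier.

```python
import re
from pathlib import Path

# Simplified bytes version of the Match Pattern: same two labels, no \b anchors.
PATTERN = re.compile(rb"-----(BEGIN|END) (RSA PRIVATE KEY|CERTIFICATE)-----")


def find_key_material(root, max_bytes=1_000_000):
    """Walk `root` and report files whose content contains a key/cert marker.

    The file extension is ignored on purpose, so a key saved as .txt is found.
    Only the first `max_bytes` of each file are read (an arbitrary limit).
    """
    hits = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            with path.open("rb") as fh:
                data = fh.read(max_bytes)
        except OSError:
            # Unreadable files (permissions, locks) are skipped in this sketch.
            continue
        labels = sorted({m.group(2).decode("ascii") for m in PATTERN.finditer(data)})
        if labels:
            hits.append((path, labels))
    return hits
```

Calling `find_key_material("/mnt/share")` (a hypothetical mount point) returns a list of `(path, labels)` pairs that you could print or feed into a report. It gives you none of the tagging, access history, or subscription alerts described above.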

Parity throughout the System

The newly created tags are now accessible through search as well as in all of the key visuals provided by DataGravity, including File Analytics, File Details, Activity Reporting, and Trending, across file shares and VMs. This is extremely powerful for understanding where this sensitive data lives and who is accessing it, and for notifying other systems, such as a PKI key management system, for full discovery.