In my last post, I provided a simple how-to for finding music and video content within file shares, VMs, and datasets. Let's now look at how to define and detect sensitive data, and how to be alerted when it is stored.

Detecting sensitive information (Credit Card, SSN, PII/PHI)

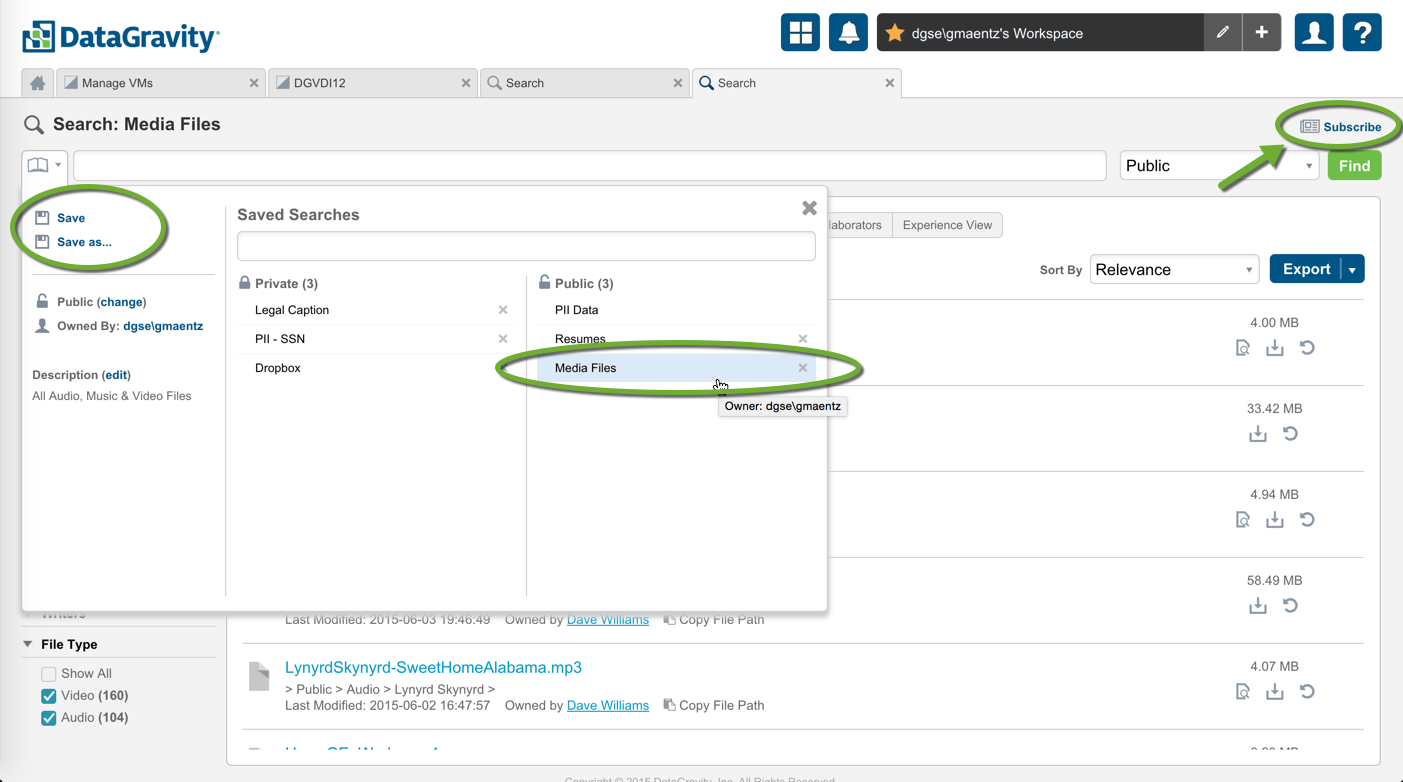

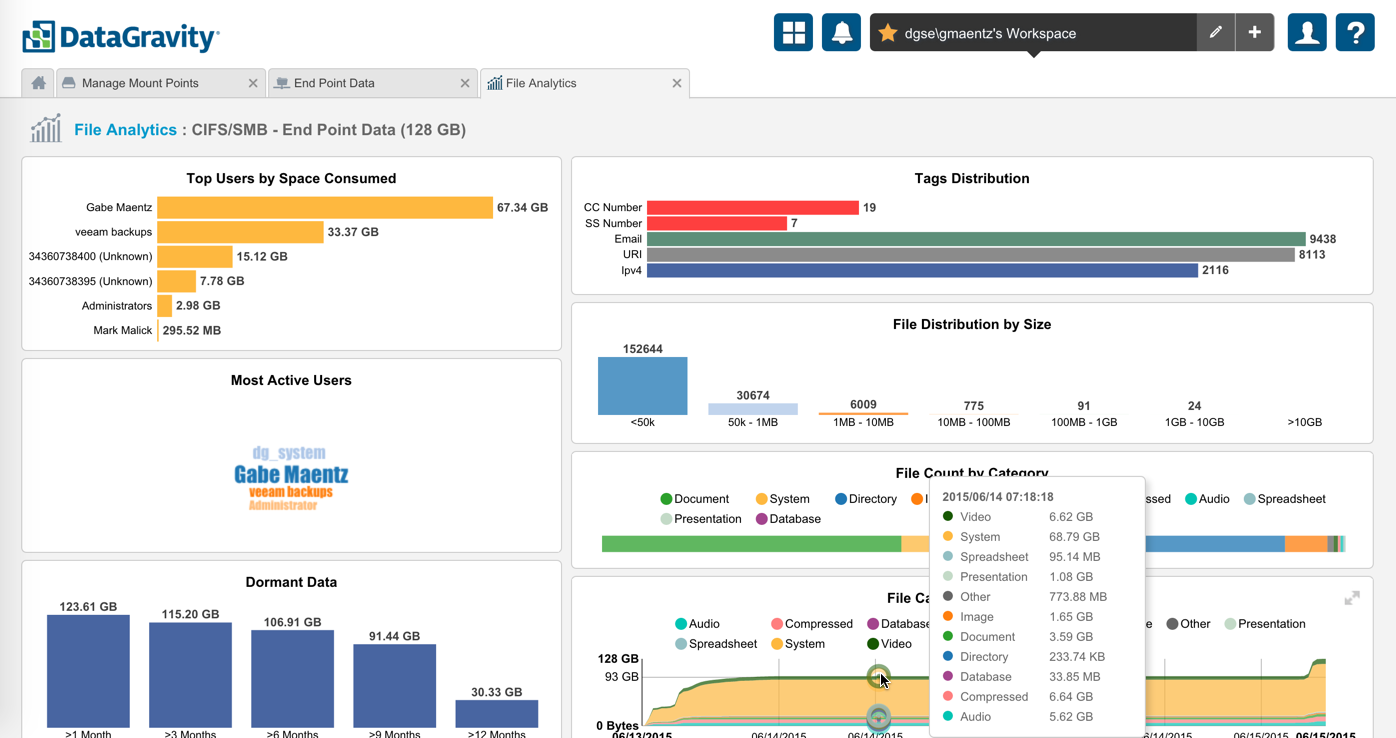

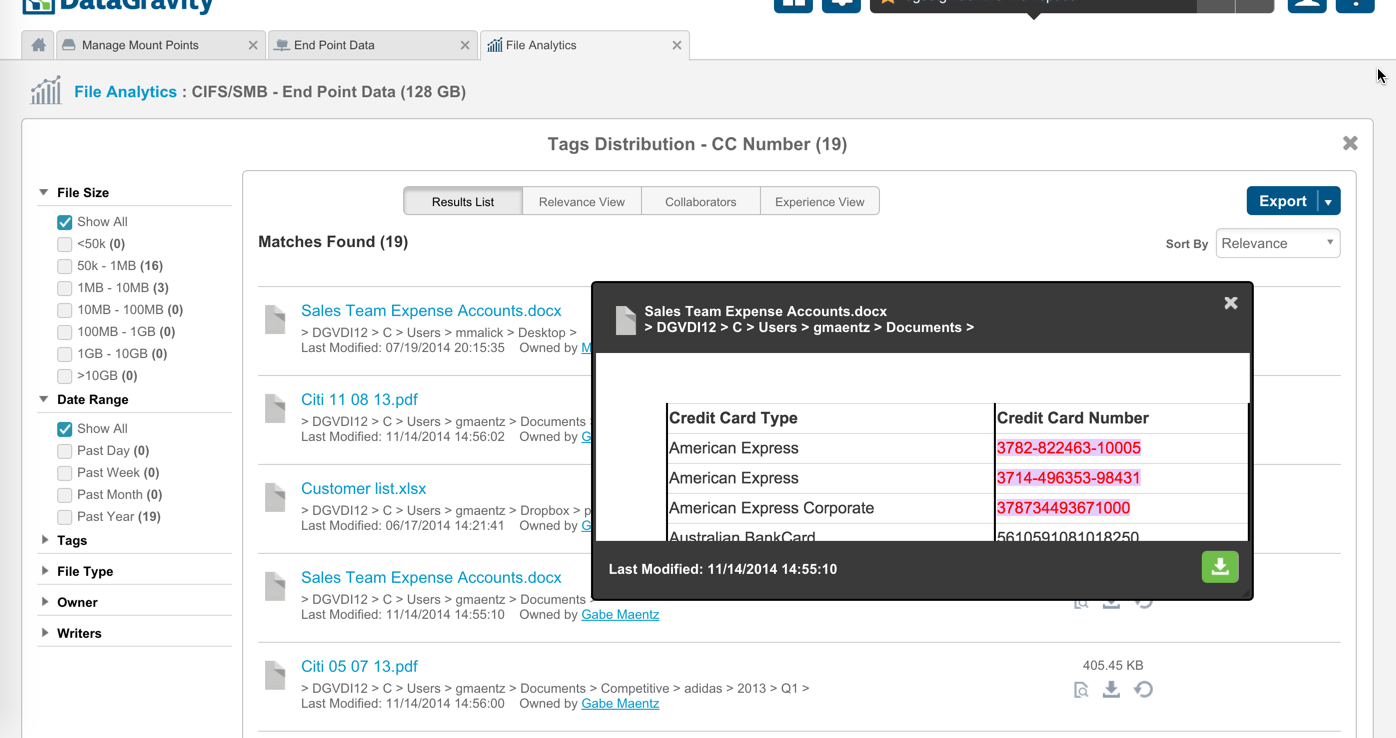

DataGravity extends the ability to detect and search by keyword and/or metadata tags for information that is sensitive. There are a number of pre-defined tags that the system can easily find within all files on shares or within VMs, and it also supports the ability to define and create your own custom tags. Examples of these sensitive tags are Social Security Numbers and Credit Card Numbers. These can be applied directly to any search, as shown below, where we are looking for Social Security Numbers on the Public share.

It looks like we found 7 matches where files contain Social Security numbers, which is validated by performing a preview of the file (directly from the search results).
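To make the idea of a sensitive tag more concrete, here is a minimal Python sketch of how Social Security and credit card numbers could be recognized in plain text. This is not how DataGravity implements its tags; the patterns, the Luhn checksum filter, and the names `SSN_PATTERN`, `CARD_PATTERN`, `luhn_valid`, and `find_sensitive_tags` are all assumptions chosen for illustration.

```python
import re

# Assumed patterns for illustration only; not DataGravity's tag definitions.
# SSN in the common AAA-GG-SSSS form, skipping ranges that are never issued.
SSN_PATTERN = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def find_sensitive_tags(text: str) -> dict:
    """Return candidate SSNs and Luhn-valid card numbers found in `text`."""
    ssns = SSN_PATTERN.findall(text)
    cards = [m.group() for m in CARD_PATTERN.finditer(text) if luhn_valid(m.group())]
    return {"ssn": ssns, "credit_card": cards}
```

The Luhn check is there to cut down on false positives: most random digit strings of card length fail it, so only plausible card numbers are reported. A pattern match is still only a candidate, which is why previewing the file remains a useful validation step.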

I could, of course, modify my search to include other sensitive tags as well, as shown below.

Subscription and Email notification

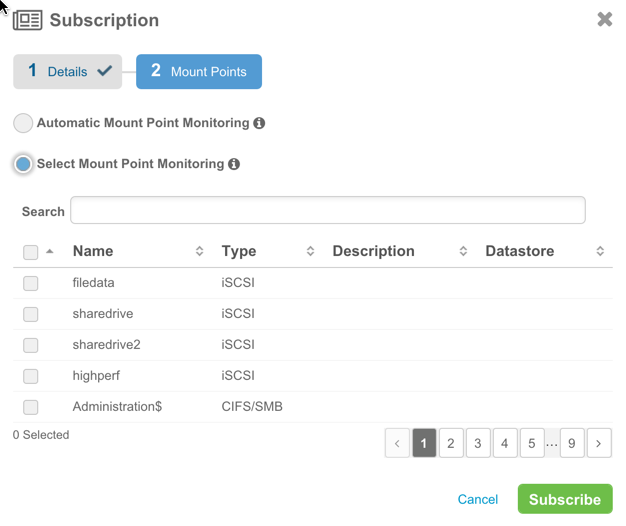

Now that we have a search defined for detecting sensitive data, we want to be notified when this type of information is found within the environment. Subscriptions and Content Alerts are both new features of DataGravity V2, and both provide proactive notification when sensitive content is found. Subscriptions allow us to specify how frequently the sensitive data search runs and to be alerted via email. We can search for sensitive data across the entire system, or monitor specific shares or VMs.

The email provides us with a link to view the search results and details specific to each share or VM.

Logging & alerting

If we wish to log and forward any details surrounding sensitive data which has been identified, we can create a Content Alert.

Content Alerts let us define system-level events that are raised when sensitive data is found. Each alert can be assigned a specific syslog event level and is then forwarded to the centralized log aggregation or security information and event management (SIEM) infrastructure.
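For readers less familiar with syslog forwarding, the sketch below shows in Python what emitting such an event at a chosen severity could look like in general. It uses the standard library's `logging.handlers.SysLogHandler`. It does not reproduce the DataGravity event format: the message layout, the severity names, the logger name, and the host shown in the usage comment are all hypothetical.

```python
import logging
import logging.handlers

# Hypothetical mapping from an alert severity name to a Python logging level.
# SysLogHandler translates these levels to the matching syslog priorities.
SEVERITY_TO_LEVEL = {
    "info": logging.INFO,
    "warning": logging.WARNING,
    "critical": logging.CRITICAL,
}


def format_content_alert(tag: str, location: str, match_count: int, results_url: str) -> str:
    """Build a key=value message (assumed layout) that a SIEM can parse."""
    return (
        f"content_alert tag={tag} location={location} "
        f"matches={match_count} results={results_url}"
    )


def build_syslog_logger(host: str, port: int = 514) -> logging.Logger:
    """Return a logger that forwards records to a syslog collector over UDP."""
    logger = logging.getLogger("content_alerts")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeat calls
        logger.addHandler(logging.handlers.SysLogHandler(address=(host, port)))
    return logger


def send_content_alert(logger: logging.Logger, severity: str, **fields) -> None:
    """Emit one alert at the syslog level that corresponds to `severity`."""
    logger.log(SEVERITY_TO_LEVEL[severity], format_content_alert(**fields))


# Example usage (hypothetical collector address and results link):
#   logger = build_syslog_logger("siem.example.com")
#   send_content_alert(logger, "warning", tag="SSN", location="Public",
#                      match_count=7, results_url="https://example.invalid/results")
```

Keeping the message as flat key=value pairs is a design choice that makes it easy to write parsing rules on the SIEM side.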

Similar to the email notifications provided using subscriptions, content alerts will contain a link to view the search results and details specific to each share or VM.