In the series of Terraform posts we have shown how to effectively utilize Infrastructure as Code to build, deploy, scale, monitor and destroy a fleet of infrastructure across multiple regions in AWS. The beauty of Terraform is that while we may have used it to build out infrastructure in AWS, we can also extend it’s use to other cloud providers as well. As I see more and more organizations adopting a multi-cloud strategy, let’s take a look at what it would take to deploy our fleet into Azure.

Azure Specifics

If you are familiar with AWS, Azure provides many similar services and features. The Azure Terraform provider is used to interact with many of the Azure resources supported by Azure Resource Manager (AzureRM). A brief overview of the Azure resources will will utilize to move our fleet to Azure are:

Azure Authentication: Terraform supports authenticating to Azure through a Service Principal or the Azure CLI. A Service Principal is an application within Azure Active Directory whose authentication tokens can be used as the client_id, client_secret, and tenant_id fields needed by Terraform. Full details to create a service principle are well documented on the Terraform website.

Resource Group: Azure holds related resources for a given solution in a logical container called a Resource Group. You cannot deploy resources into Azure without assigning them to a Resource Group which we will create and manage via the Terraform Azure provider.

Virtual Network: Akin to a AWS VPC, Azure’s Virtual Network provides an isolated, private environment in the cloud. It is here where we will define our IP address range, subnets route tables and network gateways. This build will utilize the Azure network module maintained in the Terraform module registry.

Scalability: In order to scale our fleet to the appropriate size, Azure provides Azure’s Virtual Machine Scaling Set (VMSS). AWSS is similar to AWS Auto Scaling allowing us to create and manage a group of identical, load balanced, and autoscaling VMs. The fleet will be front ended by a load balancer so that we can grow/shrink without disruption and will utilize VMSS module up on my GitHub terraform_azure repository.

Deploy to Azure

For our initial Azure deployment will will create a new set of Terraform files, including a new main.tf to tie together the details of the Azure provider, modules and specifics for how we want to the fleet built. Inside the file we have declared our connection to Azure, the resource group to build, the virtual network details as well as the web server cluster. The VMSS module referenced also builds a jump server/bastion server in the event that you need to connect to the environment to do some troubleshooting. I have specified my Azure credentials as environment variables so that they are not included in this file.

All files to create this fleet in Azure including the main.tf, output.tf and VMSS module are available in the terraform_azure repository of my GitHub account.

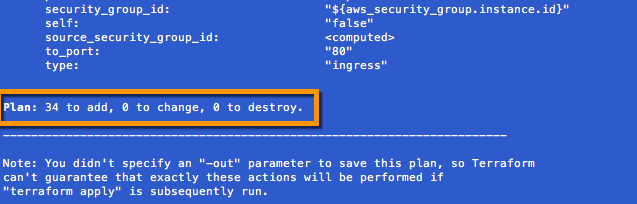

We can initialize, plan and apply our deployment using Terraform and immediately see our Azure resources being built out through the Azure Portal and inside our devtest resource group.

Once the deployment is complete, we browse to the DNS name assigned to to the load balancer front ending our Azure VMSS group. This address displayed at the end of the Terraform output, as we included an output.tf file to list relevant information.

Browsing to the DNS name we can validate our deployment is now completed. At this time we can check the health of the deployment - remember there is a jump server that is accessible if needed.

Once you are happy with the state of the new fleet in Azure it can be torn down with a terraform destroy. I recommend doing this as we prepare for the next step in the series: Moving the Fleet from AWS to Azure.

This is part of a Terraform series in which we have covered: