In the last two posts we have been working with Terraform to automate the buildout of our Virtual Private Cloud (VPC) in AWS and deploy a fleet of scalable web infrastructure. Our next step is to complete the infrastructure build so that it is identical across our development/test, staging and production environments. Each of these environments resides in a different region: development (us-west-2), staging (us-east-1) and production (us-east-2).

File Layout

For our development environment, the work is close to complete. We already have a working set of code that deploys into the us-west-2 region, contained in the main.tf and outputs.tf files. We will add one step: creating a folder structure that makes the code easy to manage and reference. At the top level we create a separate folder for each environment, and within each of these folders a sub-folder named for the type of fleet we are deploying. For our development web server deployment, the folder structure is simple and looks like this:

    .
    ├── dev
    │   └── webservers
    │       ├── main.tf
    │       ├── outputs.tf

There are a number of benefits to laying out our files in this manner, including isolation, re-use and managing state, which Yevgeniy Brikman does an excellent job of describing. As Brikman puts it: "This file layout makes it easy to browse the code and understand exactly what components are deployed in each environment. It also provides a good amount of isolation between environments and between components within an environment, ensuring that if something goes wrong, the damage is contained as much as possible to just one small part of your entire infrastructure."
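As a minimal sketch of the move into this layout, the helper below creates the environment and fleet folders and relocates the two existing files. The function name `make_env_dir` is hypothetical and only used for illustration; it assumes main.tf and outputs.tf sit in the current directory.

```shell
# make_env_dir is a hypothetical helper name, not a Terraform command.
# It creates <environment>/<fleet> and moves the existing Terraform files into it.
make_env_dir() {
  target="$1/$2"                      # e.g. dev/webservers
  mkdir -p "$target" || return 1
  for f in main.tf outputs.tf; do
    # Move each file only if it is present, so the helper is safe to re-run.
    if [ -f "$f" ]; then
      mv "$f" "$target/" || return 1
    fi
  done
}

# Usage, from the directory that currently holds main.tf and outputs.tf:
#   make_env_dir dev webservers
```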

Deploy Development

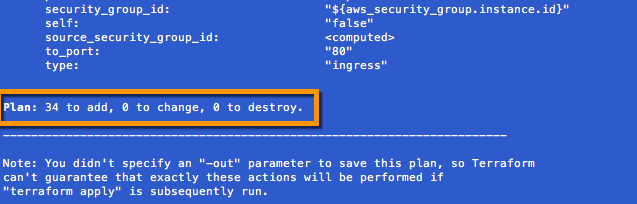

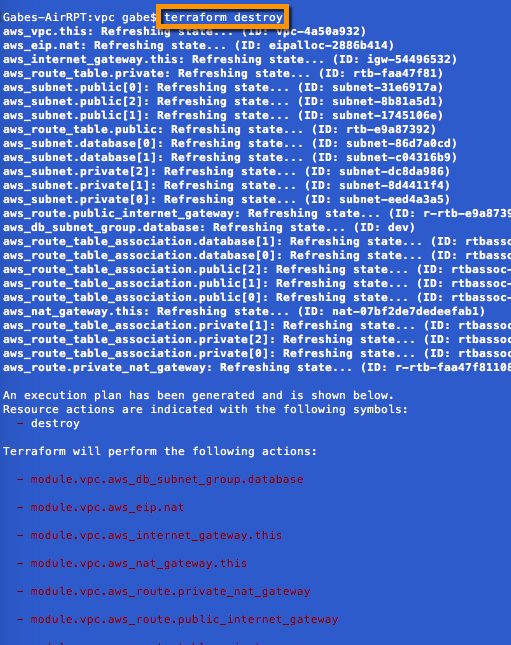

Now that our file layout is the way we want it, let's deploy development. This is simple, and something we have done a few times now. Since we moved our two files into a new folder location, we will need to initialize the deployment (terraform init), plan it (terraform plan) and finally deploy (terraform apply).

Once complete we can browse over to see the development deployment.
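The init, plan and apply sequence can be wrapped in a small helper so the same steps run from any environment folder. This is a sketch only: `deploy_env` is a hypothetical name, and it assumes the `terraform` binary is on the PATH and AWS credentials are already configured.

```shell
# deploy_env is a hypothetical helper name; terraform init/plan/apply are the
# standard Terraform CLI subcommands named in the text.
deploy_env() {
  env_dir="$1"
  (
    # Run in a subshell so the caller's working directory is left unchanged.
    cd "$env_dir" || exit 1
    terraform init || exit 1    # needed because the files moved to a new folder
    terraform plan || exit 1    # preview the changes before making them
    terraform apply             # asks for confirmation before changing anything
  )
}

# Usage, from the project root:
#   deploy_env dev/webservers
```

Stopping at the first failing step keeps an apply from running against a working directory that failed to initialize or plan.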

Deploy Staging

One of the most powerful benefits of deploying our infrastructure as code in a modular way is reusability. In fact, building out our staging environment takes only a couple of steps. First we create a staging folder in which to store our files, and then we copy over our main.tf and outputs.tf files. We then make a few edits to main.tf, updating the region, IP address space, tags, ami, cluster name, cluster size and key_names. Looking at the differences between development and staging is as simple as running a compare between the main.tf files in the dev and staging folders. The differences are highlighted below:

Once we are happy with the updates, the sequence to deploy is exactly what we are used to. This time we run our initialize, plan and deploy steps from within the staging folder. Once complete we can browse over to see the staging deployment.
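The copy-and-compare steps for staging can be sketched with two small helpers. The names `clone_env` and `compare_env` are hypothetical, and the sketch assumes staging uses the same webservers sub-folder as dev.

```shell
# clone_env and compare_env are hypothetical helper names.

# Copy the two Terraform files from one environment folder to another.
clone_env() {
  src="$1"
  dst="$2"
  mkdir -p "$dst/webservers" || return 1
  cp "$src/webservers/main.tf" "$src/webservers/outputs.tf" "$dst/webservers/"
}

# Show a unified diff of main.tf between two environments.
# diff exits with status 1 when the files differ, which is the expected
# result once the second environment's main.tf has been edited.
compare_env() {
  diff -u "$1/webservers/main.tf" "$2/webservers/main.tf"
}

# Usage, from the project root:
#   clone_env dev staging
#   (edit staging/webservers/main.tf: region, IP address space, tags, and so on)
#   compare_env dev staging
```

The same two helpers apply unchanged when copying staging into a production folder.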

Production Deployment

Our production environment will mimic our staging environment with only a few edits: it deploys to the us-east-2 region, starts with 8 web servers in the fleet, and scales as needed. Once again leveraging infrastructure as code, we simply copy the main.tf and outputs.tf files out of staging and make our edits. The differences between staging and production are highlighted below:

Now we use the power of Terraform to deploy, and voilà...production is LIVE.

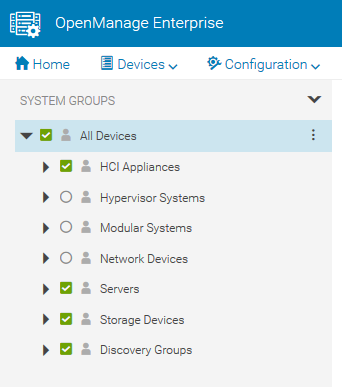

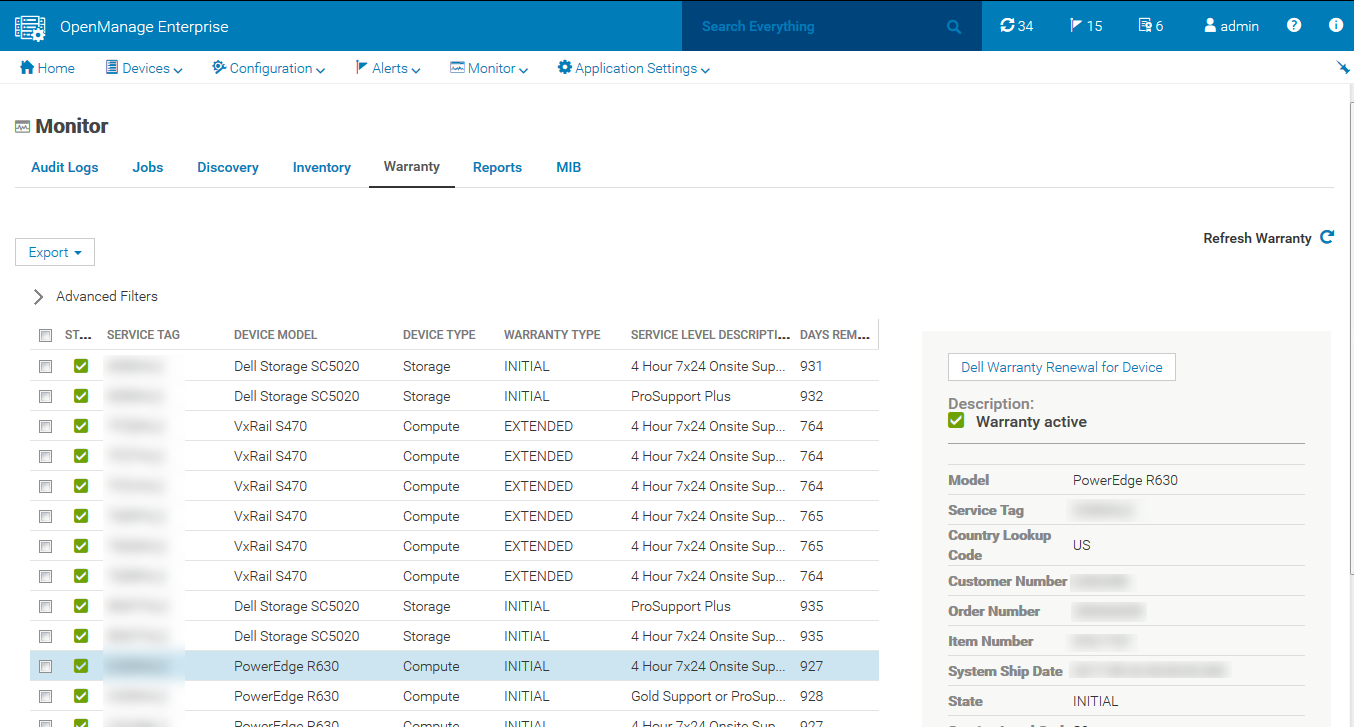

Visualizing Our Deployment

Now that our 3 deployments are complete, we can see the folder structure that has been built out, maintaining a separate state for each environment.
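One quick way to confirm that each environment keeps its own state is to list the state files under the project root. This sketch assumes Terraform's default local backend, which writes terraform.tfstate into the folder where apply was run; `list_state_files` is a hypothetical helper name.

```shell
# list_state_files is a hypothetical helper name.
# It prints every terraform.tfstate under the given root (default: current
# directory), skipping Terraform's internal .terraform working folders.
list_state_files() {
  find "${1:-.}" -name .terraform -prune -o \
    -type f -name terraform.tfstate -print | sort
}

# Usage, from the project root:
#   list_state_files
```

With three deployed environments, the listing should show one state file per environment folder.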

To document and visualize our build out, I like to use hava.io, which builds architecture diagrams for AWS and Azure environments. As you can see, all three environments are active and we can drill into any of them to see the details, including pricing estimates: production ($115/month), staging ($91/month), dev ($72/month).

Mission Complete

Our mission was to create and deploy a set of auto-scaling web servers, fronted by a load balancer, for our development, staging and production environments across 3 different AWS regions. Through the power of infrastructure as code, we used Terraform to define, plan and automate a consistent set of deployments. Mission Complete.

Terraform Series

This is part of a Terraform series in which we cover: