Next week I will be attending my fifth VMworld, and I have had the good fortune of seeing the conference through the eyes of a customer, partner, reseller and blogger. Last week I received news that VMware would be sponsoring me with a blogger pass to attend this year's show, for which I am most grateful. I find that VMworld is most valuable for me when I enter with a plan. I would encourage others - especially first timers - to build out a plan for what they want to get out of the conference.

My blogging and professional development for VMworld 2018 will focus on automation and orchestration within the VMware ecosystem and across heterogeneous clouds. I am most interested in developing deeper product and tooling expertise, while hearing firsthand from businesses and community members how they are refining their skill sets to keep up with the demands placed upon them in a multi-cloud world.

vmware {code} Hackathon 2018

One of the events I am most looking forward to this year is the Hackathon. Having participated two years ago, I am excited to see the level of attention and interest in this year's event. This event highlights the creativity, diversity, vulnerability and genuine 'can do' attitude that I have come to love in the VMware community. If you want to get out of your comfort zone and hang with some tremendous people, the Hackathon is for you. I am not yet formally on a team (remember, I just found out I would be attending the conference last week), but I still have time. Some of the teams I plan to keep a close eye on are:

- vMafia 2.0 - Building a vSphere Client Plugin to run PowerShell / PowerCLI within the new HTML5 web console.

- comdivision - automation around vRO, PowerCLI

- VDM-Hackers - creators of VirtualDesignMaster.io, this team is going to set big goals and do great (fun) things

- Pythonic - build a bolt-on user interface to Vault to make it easy for homelab users to get started with secrets management.

- View API & PS - improve the vmware.hv.helper module by adding new functions or improving existing ones and, if time allows, extend the vCheck for Horizon View

- vmug-cloud-labs - nested lab builds within VMware Cloud on AWS.

- PS API Gateway - Building a PowerShell API gateway to interact with all VMware services in a simple standard format.

Tech Field Day - Extra

At VMworld 2015, I had my first in-person Tech Field Day experience as a presenter. The Tech Field Day events bring together innovative IT product vendors and independent thought leaders to share information and opinions in a presentation and discussion format. Since then I have been fortunate to be a delegate to several Tech Field Day events. On Tuesday afternoon of VMworld 2018, I will again be joining the delegate ranks to engage with what look to be three interesting companies. I know very little about each of them and am excited to learn more.

- JetStream Software - The JetStream Software platform empowers infrastructure spanning multiple data centers and cloud services. Applications enjoy cloud-scale elasticity, and can migrate seamlessly, all with enterprise grade performance, availability and resilience.

- Quali - Blueprint, model, and deploy on-demand, self-service, sandbox environments for labs, data centers, and hybrid cloud.

- Runecast - Proactively use VMware KBs, best practices and security hardening guidelines to protect your environment

These sessions will be live streamed, with the recordings and coverage made available on the Tech Field Day website.

Break out Sessions

Signing up for the conference late does come with some disadvantages, and one of those is difficulty getting into popular sessions. Nonetheless, there are some great sessions which I plan to take in firsthand, and the others I plan to view online when they become available.

Culture Shift: The Heavy Lifting Behind Digital Transformation [LDT1895BU]

Monday, August 27, 11:30 AM - 12:30 PM | Lagoon D, Level 2

Introduction to Cloud Native App Development on K8s (Maker Space Workshop) [CODE5627U]

Monday, August 27, 01:00 PM - 02:50 PM

Deep Dive: How Fannie Mae Releases vRealize Content Using Lifecycle Manager [MGT2174BU]

Wednesday, August 29, 09:30 AM - 10:30

Instrumenting all things Kubernetes (Maker Space Workshop) [CODE5633U]

Wednesday, August 29, 01:00 PM - 02:50

Top 5 Processes to Accelerate DevOps with Josh Miller [MTE5074U]

Wednesday, August 29, 03:15 PM - 04:00

Destination Digital: Plot a Course for Transformation with Cloud Migration [LDT2207BU]

Thursday, August 30, 10:30 AM - 11:30 AM

Infrastructure as Code for the Enterprise [CODE5570U]

Thursday, August 30, 12:15 PM - 12:45 PM

Infrastructure as Code(IaC) [VMTN5615U]

Thursday, August 30, 01:45 PM - 02:00 PM

vExpert Session - Mystery CLOUD Session 1

Tuesday, August 28, 04:15 PM - 05:15 PM

vExpert Session - Mystery AUTOMATION Session 2

Wednesday, August 29, 05:15 PM - 06:15 PM

vBrownBag

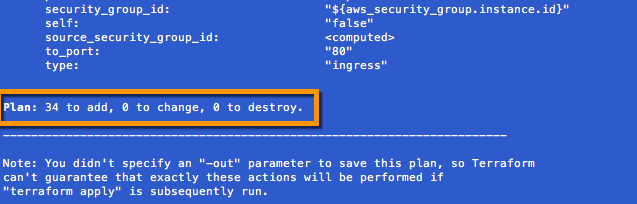

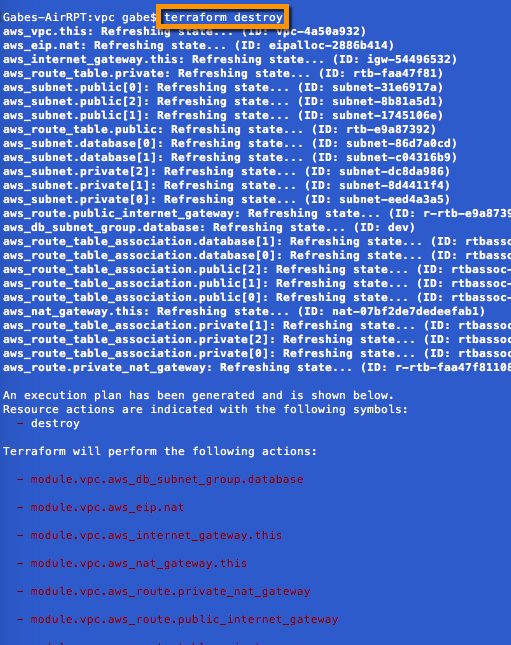

Schedule and time permitting, I am prepared to present a vBrownBag TechTalk on my recent work with Terraform on AWS and VMware. I have found that the vBrownBag stage and surrounding area in the Hang Space is a great place to meet and engage with other attendees and community members. If you would like to connect, this is usually the place to find me.

Community, Parties and Events

Of course, one of the best things about VMworld is interacting with the vCommunity, and this community likes to have a good time. Unfortunately I will not be able to attend vBreakfast, Open Acts or VMunderground this year because I don't arrive until Monday of the show. You should definitely check out these great community events, as well as the full list of other parties. Rumor has it that some of my favorites, including Snoop Dogg, Run DMC & the Roots, will be in attendance. I am traveling with some first-time Las Vegas goers, so I hope to spend some time enjoying the sights and sounds with them. At this point I am confirmed to be at both the vExpert party and vSoccer events on Tuesday night, but if history is an indicator I will find my way to several others.

Contact Info

Of course, if you are attending the show I would love to connect with you, schedules permitting. Hit me up on Twitter @gmaentz or via email at gabe@maentz.net. I will be heading back home on Thursday evening.